Institute For Ethical Hacking Course and Ethical Hacking Training in Pune – India

Extreme Hacking | Sadik Shaikh | Cyber Suraksha Abhiyan

Credits: The Register

Sneezes and homophones – words that sound like other words – are tripping smart speakers into allowing strangers to hear recordings of your private conversations.

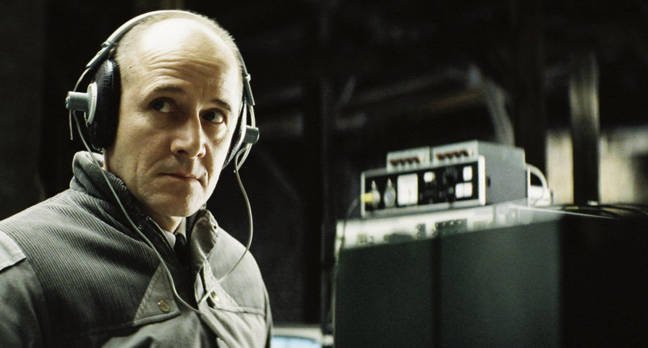

These strangers live an eerie existence, a little like the Stasi agent in the movie The Lives of Others. They are machine learning data analysts contracted to work for the device manufacturer, and the snippets they hear were never intended for third-party consumption.

Bloomberg has unearthed the secrets of Amazon’s analysts in Romania, reporting on their work for the first time. “A global team reviews audio clips in an effort to help the voice-activated assistant respond to commands,” the newswire wrote. Amazon has not previously acknowledged the existence of this process, or the level of human intervention.

The Register asked Apple, Microsoft and Google, which all have smart search assistants, for a statement on the extent of human involvement in reviewing these recordings – and their retention policies.

None would disclose the information by the time of publication.

What’s it for?

I wrote a thing! Short version: don’t surveil people; don’t turn students into spies; don’t divorce privacy from its effects on vulnerable populations. https://t.co/d4EsH1Q3fD

— One Ring (doorbell) to surveil them all… (@hypervisible) March 25, 2019

As the Financial Times explained this week (paywalled): “Supervised learning requires what is known as ‘human intelligence’ to train algorithms, which very often means cheap labour in the developing world.”

Amazon sends fragments of recordings to the training team to improve Alexa’s speech recognition. Thousands are employed to listen to Alexa recordings in Boston, India and Romania.

Alexa only responds to a wake word, according to its maker. However, because Alexa can misinterpret sounds and homophones as its default wake word, the team was able to hear audio never intended for transmission to Amazon. The team received recordings of embarrassing and disturbing material, including at least one sexual assault, Bloomberg reported.

Amazon encourages staff disturbed by what they hear to console each other, but didn’t elaborate on whether counseling was available.

The retention of the audio files is purportedly voluntary, but this is far from clear in the information Amazon gives users. Amazon and Google allow the voice recordings to be deleted from your account, for example, but this may not be permanent: the recording could continue to be used for training purposes (Google’s explanation can be found here).

A look at a personal voice history shows that Amazon continues to hold audio files of material “not intended for Alexa”.

As mentioned, we have yet to receive a formal statement from Apple, Microsoft or Google.

Privacy campaigners cite two areas of concern: how long recordings are kept, and whether deletion is permanent. Voice platforms could offer an “auto purge” function that deletes recordings older than a set period, such as a day or 30 days. And they could ensure that, once deleted, a file is gone forever. Both merit formal legal clarity.
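For the curious, an “auto purge” of the kind campaigners describe could be as simple as the sketch below. It is purely illustrative and is not how Amazon, Google or anyone else is known to handle recordings: the function name `purge_old_recordings`, the `recorded_at` field and the in-memory list are all assumptions made for the example.

```python
from datetime import datetime, timedelta, timezone


def purge_old_recordings(recordings, max_age_days, now=None):
    """Return only the recordings that fall inside the retention window.

    `recordings` is a hypothetical list of dicts, each with a
    timezone-aware `recorded_at` datetime. Anything older than
    `max_age_days` is dropped from the returned list.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [r for r in recordings if r["recorded_at"] >= cutoff]


# Example: three made-up clips aged 12 hours, 2 days and 40 days.
now = datetime(2019, 4, 12, tzinfo=timezone.utc)
clips = [
    {"id": "a", "recorded_at": now - timedelta(hours=12)},
    {"id": "b", "recorded_at": now - timedelta(days=2)},
    {"id": "c", "recorded_at": now - timedelta(days=40)},
]

kept_one_day = purge_old_recordings(clips, max_age_days=1, now=now)
kept_thirty_days = purge_old_recordings(clips, max_age_days=30, now=now)
```

With a one-day window only clip `a` survives; with a 30-day window clips `a` and `b` do. Filtering a list only covers the first concern. The second, making sure a deleted file is gone forever, would also mean removing every copy held in backups and training sets, which a sketch like this does not address.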

www.extremehacking.org
